Multi-agent Reinforcement Learning

We work on multi-agent reinforcement learning (MARL) algorithms for coordinating agents in cooperative tasks, such as search-and-rescue, surveillance missions, and warehouse robotics.

This work was funded in part by ARL W911NF2020184, NASA 80NSSC23M0221, and ONR N00014-20-1-2249.

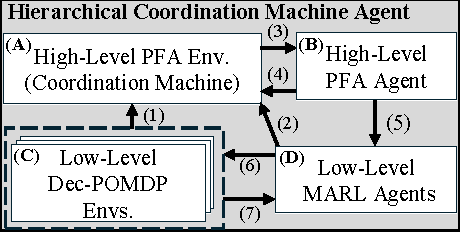

Coordination machines for minimizing communications in MARL

This work proposes coordination machines (CMs), a hierarchical model for minimizing communication in MARL subject to a desired overall task success rate. Our key idea is that subtasks may require different levels of communication, so varying the level of communication allowed for each subtask can lower the overall communication cost. See our CoCoMARL RLC 2024 Workshop paper for more details.
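To make the trade-off concrete, here is a toy sketch of choosing a communication level per subtask under a success-rate constraint. It is not the method from the paper: the subtask names, costs, and success probabilities are hypothetical, the overall success rate is assumed to be the product of independent subtask success rates, and the search is plain brute force.

```python
from itertools import product

# Hypothetical numbers: for each subtask, communication level -> (cost, success probability).
SUBTASKS = {
    "search": {0: (0.0, 0.80), 1: (1.0, 0.95), 2: (2.0, 0.99)},
    "rescue": {0: (0.0, 0.60), 1: (1.0, 0.90), 2: (2.0, 0.98)},
}


def cheapest_assignment(subtasks, min_success):
    """Return (cost, success, levels) for the cheapest feasible choice, or None."""
    names = list(subtasks)
    best = None
    # Enumerate every combination of per-subtask communication levels.
    for levels in product(*(subtasks[name] for name in names)):
        cost = sum(subtasks[n][lvl][0] for n, lvl in zip(names, levels))
        # Assumption: subtasks succeed independently, so probabilities multiply.
        success = 1.0
        for n, lvl in zip(names, levels):
            success *= subtasks[n][lvl][1]
        if success >= min_success and (best is None or cost < best[0]):
            best = (cost, success, dict(zip(names, levels)))
    return best


result = cheapest_assignment(SUBTASKS, min_success=0.85)
```

With these made-up numbers, the cheapest feasible choice uses level 1 for both subtasks at a total cost of 2.0, whereas running every subtask at the highest level would cost 4.0.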

Addressing multi-agent credit assignment with successor features

This work leverages successor features (SFs) to disentangle an individual agent’s impact on a shared reward from that of all other agents, thus addressing multi-agent credit assignment when training decentralized agents. The video shows an example of gameplay from the StarCraft Multi-Agent Challenge (SMAC) in which the red agents learned to surround the blue agents and win the game despite being outnumbered (27 vs. 30 agents). See our AAMAS 2022 paper presenting our approach, named DISentangling Successor features for Coordination (DISSC), for more details.
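For readers new to successor features, the sketch below illustrates the standard single-agent identity they rest on: if the reward is linear in some features, the value is the successor features times the reward weights. It does not implement DISSC; the 3-state Markov chain, one-hot features, and reward weights are hypothetical.

```python
import numpy as np

gamma = 0.9  # discount factor

# Hypothetical 3-state Markov chain induced by a fixed policy.
P = np.array([
    [0.5, 0.5, 0.0],
    [0.0, 0.5, 0.5],
    [0.0, 0.0, 1.0],
])

# One-hot state features: phi(s) is row s of the identity matrix.
Phi = np.eye(3)

# Reward weights, so that r(s) = Phi[s] @ w (reward 1 only in the last state).
w = np.array([0.0, 0.0, 1.0])

# Successor features satisfy Psi = Phi + gamma * P @ Psi,
# hence Psi = (I - gamma * P)^{-1} Phi.
Psi = np.linalg.solve(np.eye(3) - gamma * P, Phi)

# Value obtained through successor features.
V_sf = Psi @ w

# Value obtained directly from the Bellman equation V = r + gamma * P @ V.
V_direct = np.linalg.solve(np.eye(3) - gamma * P, Phi @ w)

# Changing the reward weights reuses Psi without re-solving the dynamics.
w_new = np.array([1.0, 0.0, 0.0])
V_new = Psi @ w_new
```

Because the dynamics are summarized once in `Psi`, values under different reward weights come from a single matrix-vector product, which is the property that makes SFs useful for separating out reward contributions.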